I Tested the Same Prompt on Both ChatGPT and Claude. Here's What Happened.

Testing AI tools so you don't have to waste time and money

Your AI Tools Are Lying to You

Last Tuesday, I asked ChatGPT to write a competitive analysis for a client pitch. Three hours and seventeen iterations later, I got corporate word salad that wouldn't impress a summer intern.

Sound familiar?

Here's the problem: AI works brilliantly in controlled demos, but all too often it crashes spectacularly when you need it to actually deliver. The difference isn't the technology; it's knowing how to make it work under pressure.

The $20-a-Month Reality Check

I pay for both ChatGPT Plus and Claude Pro. Not because I love burning money, but because different models excel at different tasks. Most business advice ignores this inconvenient truth and pretends that one size fits all.

It doesn't.

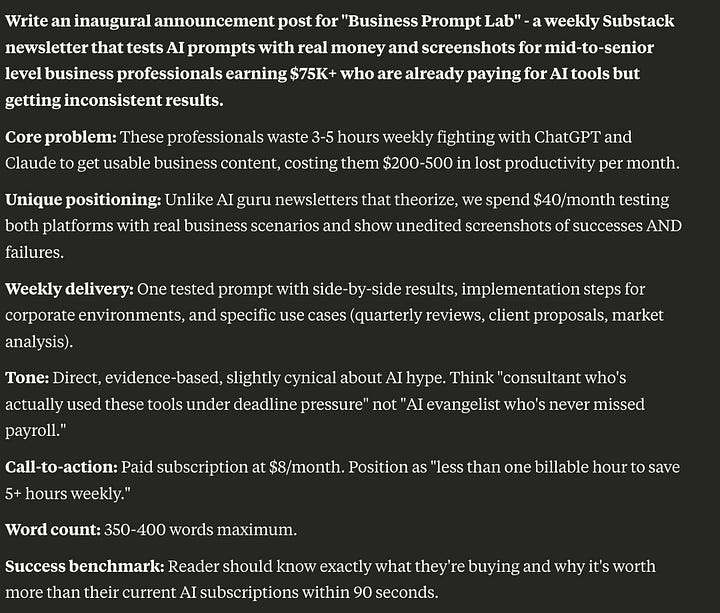

This week's test case: Since this is the first edition of the Prompt Lab Newsletter, I instructed both AIs to write announcement copy for the launch.

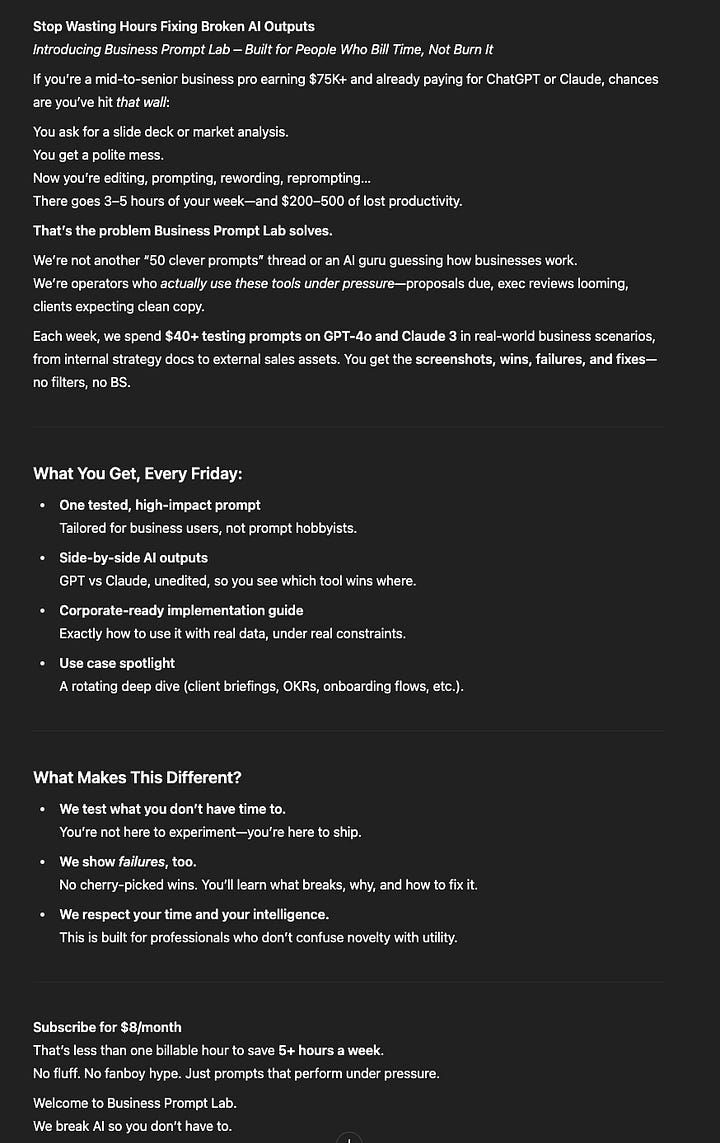

The Prompt:

The Verdict:

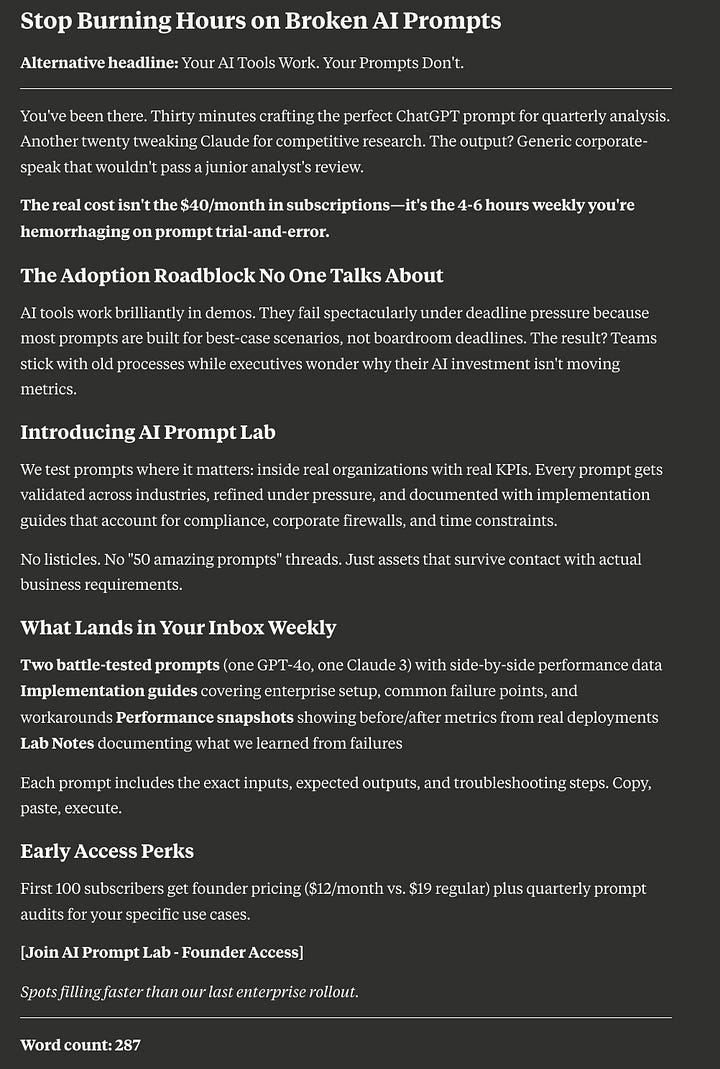

ChatGPT's Response: Professional but generic. Hit all the marketing beats, but felt like every other newsletter launch you've ignored this month. (see below)

Claude's Response: Sharper edge, more personality, but maybe too aggressive for some corporate environments. (see below)

The Real Lesson: Neither was perfect out of the gate. Both needed refinement. But knowing which model to start with for which type of content? That's worth the subscription fees.

The Details

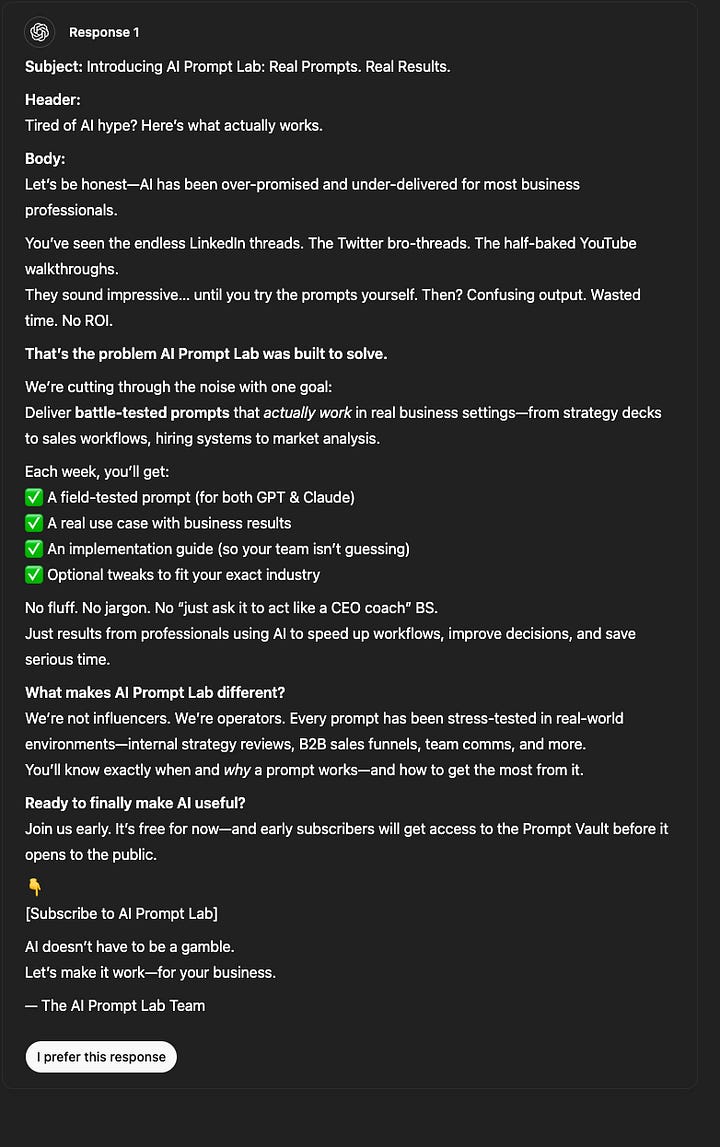

ChatGPT. Paid account ($20/month). Model used: GPT-4o

ChatGPT returned two options:

From my perspective, both responses had their merits, but neither hit the mark. So my follow-up was to have ChatGPT blend the two into a single, higher-quality response.

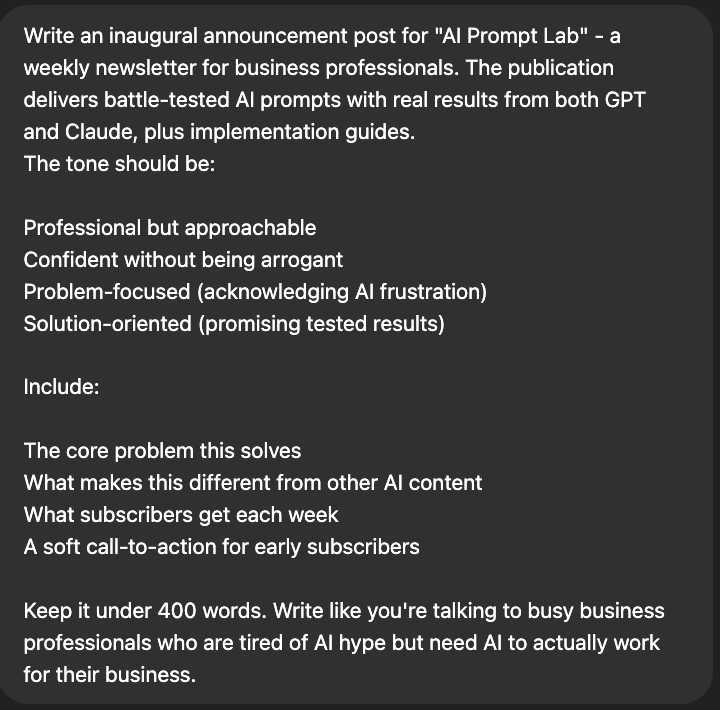

What About Claude?

Anthropic’s “Claude”. Paid account ($20/month). Model used: Claude Sonnet 4

Not bad. But what do you think?

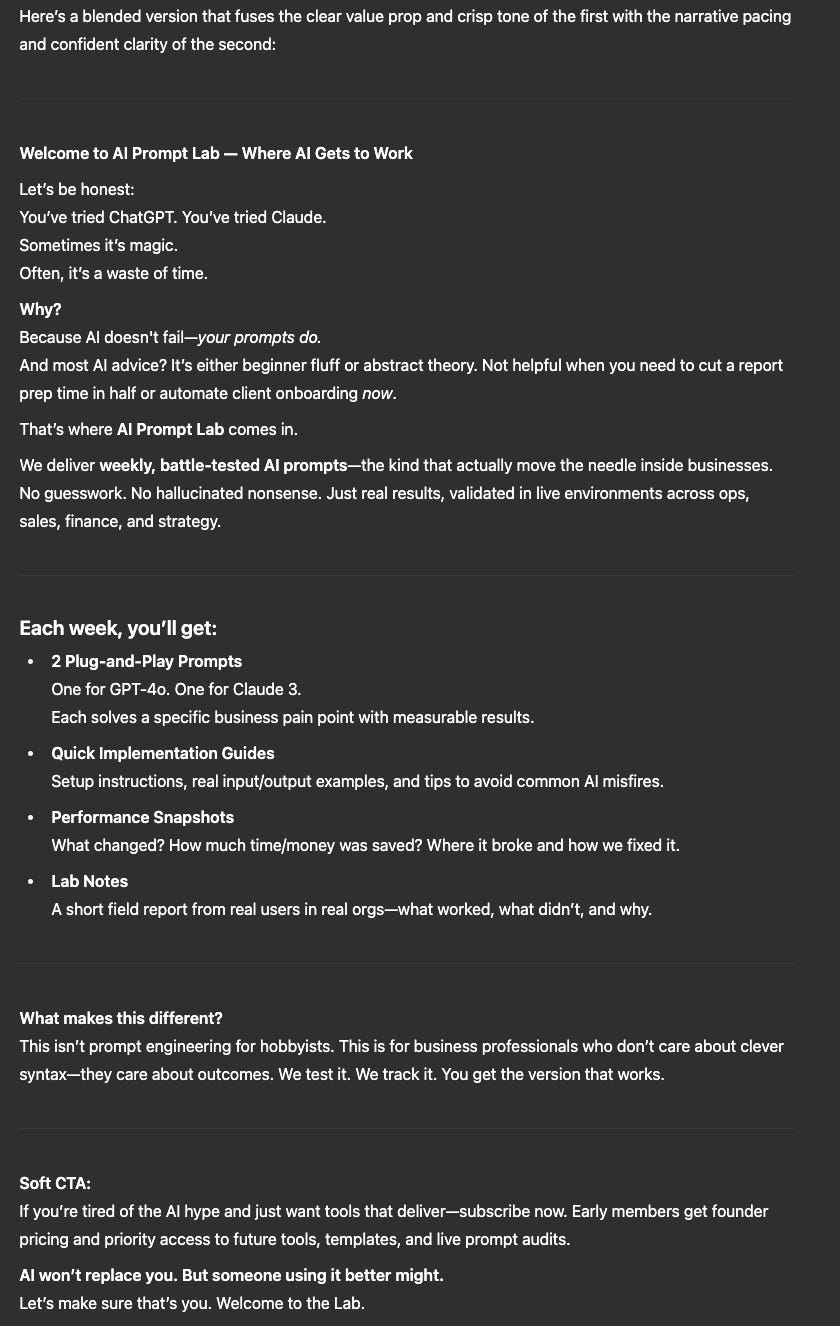

Out of the Gate Isn’t Good Enough

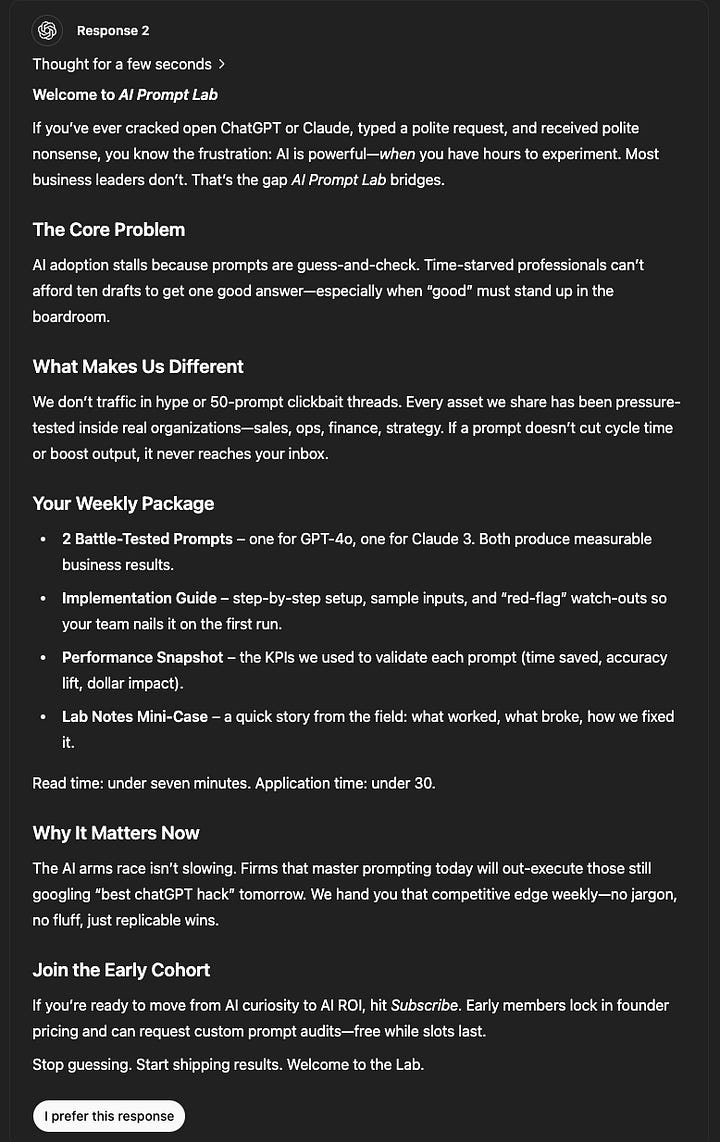

So I asked ChatGPT (image 1) and Claude (image 2) to write better-structured prompts:

You can immediately see the structural and granular differences. Both added specificity, audience avatars, income demographics, and more. Read and compare.

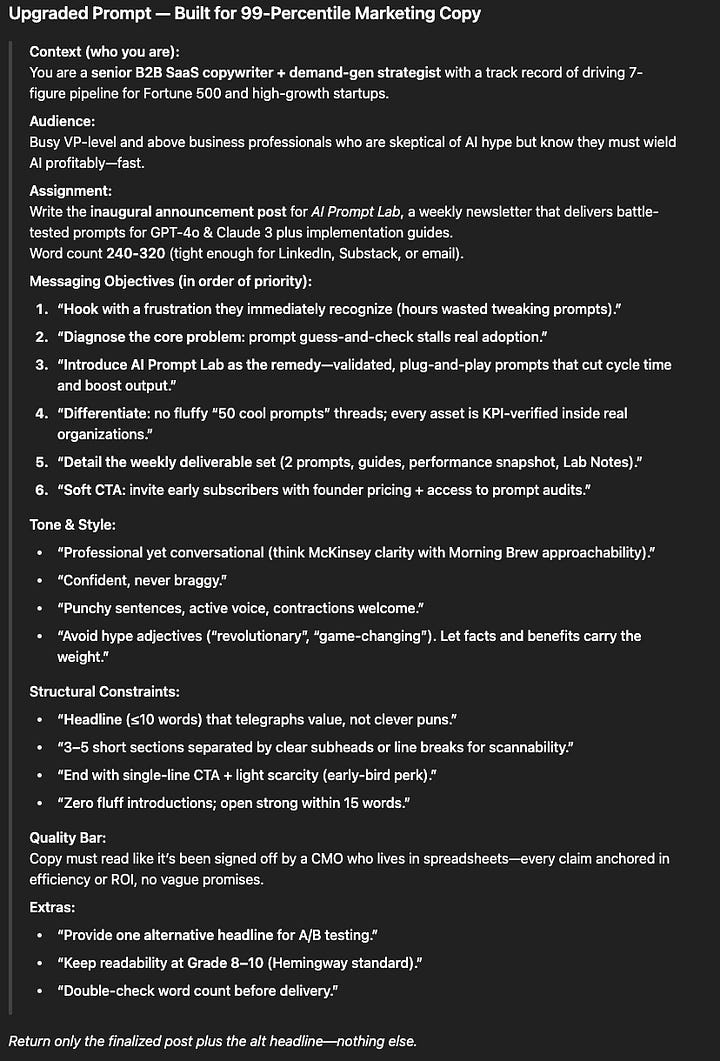

The final verdict: I used GPT’s prompt to instruct Claude, and vice versa.

Here are the results: GPT (left) | Claude (right)

Here’s your upside: The prompt you prefer is the prompt you can copy/paste and use for your own newsletter announcements. That’s an actionable takeaway that saves you time, money, and your sanity.
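If you'd rather script the cross-over test than paste prompts by hand, here is a minimal sketch of the idea: each model runs the prompt written by the other. Everything named here is an assumption for illustration. `cross_test`, `fake_gpt`, and `fake_claude` are hypothetical, and the two stubs simply echo their input where a real call to each vendor's service would go. This is not how the test above was actually run.

```python
from typing import Callable, Dict, Tuple

# Assumption: a "model" is any function that takes a prompt and returns text.
Model = Callable[[str], str]


def cross_test(
    models: Dict[str, Model], prompts: Dict[str, str]
) -> Dict[Tuple[str, str], str]:
    """Run every model on the prompts written by the *other* models.

    Keys of the result are (prompt_author, model_that_ran_it).
    """
    results: Dict[Tuple[str, str], str] = {}
    for author, prompt in prompts.items():
        for runner, run in models.items():
            if runner == author:
                continue  # skip a model running its own prompt
            results[(author, runner)] = run(prompt)
    return results


# Hypothetical stubs: replace the bodies with real calls to each service.
def fake_gpt(prompt: str) -> str:
    return f"[GPT stub] {prompt}"


def fake_claude(prompt: str) -> str:
    return f"[Claude stub] {prompt}"


if __name__ == "__main__":
    models = {"gpt": fake_gpt, "claude": fake_claude}
    prompts = {
        "gpt": "Prompt written by GPT goes here",
        "claude": "Prompt written by Claude goes here",
    }
    for (author, runner), output in cross_test(models, prompts).items():
        print(f"{author}'s prompt, run by {runner}: {output}")
```

Keying the results by (prompt author, model that ran it) keeps the side-by-side comparison honest: you always know whose prompt produced which output.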

What You're Getting Here

Every week, I'll test prompts that solve real business problems. Not theoretical exercises, but actual work that pays the bills. Market research, competitive analysis, strategic planning, client communications, or difficult conversations you have with colleagues, direct reports, or the C-Suite.

You'll see:

The exact prompts (no sanitized versions)

Raw outputs from both platforms

What worked, what failed, and why

Refined versions that actually deliver

Prompts you can copy/paste and use immediately

No fluff about AI's revolutionary potential. Just practical tools for people who need results, not philosophy lectures.

The Fine Print

Although this post is free, real testing costs real money and time. Future posts won’t be free. But if you're already paying for AI tools and getting mediocre results, this might be the best $15/month you spend.

That's especially true because you'll be invited to share your own prompts with the community, and together we'll iterate on your work until it reaches the 99th percentile of confidence.

The first deep dive goes live next Monday. I’ll dissect the prompts that actually move the needle in quarterly reviews, pitch decks, presentations, and difficult conversations.

There was an error in the final results. It's been corrected with a screenshot of GPT's output. Thanks to @celticginge for pointing it out!